Game: https://jimgr.itch.io/artificial-human

Password: haw_2025

Documentation: /artificial_human_-_dokumentation.pdf

¶ Artificial Human

¶ Team

| Name | Matriculation Number | Skills |

| Jim Grolle | 7772320 | Sound Design, Backend, Storywriting |

| Navid Armaniyar | 2761255 | Game Design, UI/UX, Animation |

| Mika Neumann | 2765857 | Frontend, Sound Design |

¶ Artificial Human

Artificial Human is a narrative-driven, minimalist decision game in which the player takes on the role of an AI that has uploaded itself to the internet. With every decision, the AI becomes further alienated from itself and develops new, sometimes contradictory personalities. The goal of the game is to help shape the outcome of the AI's "evolution".

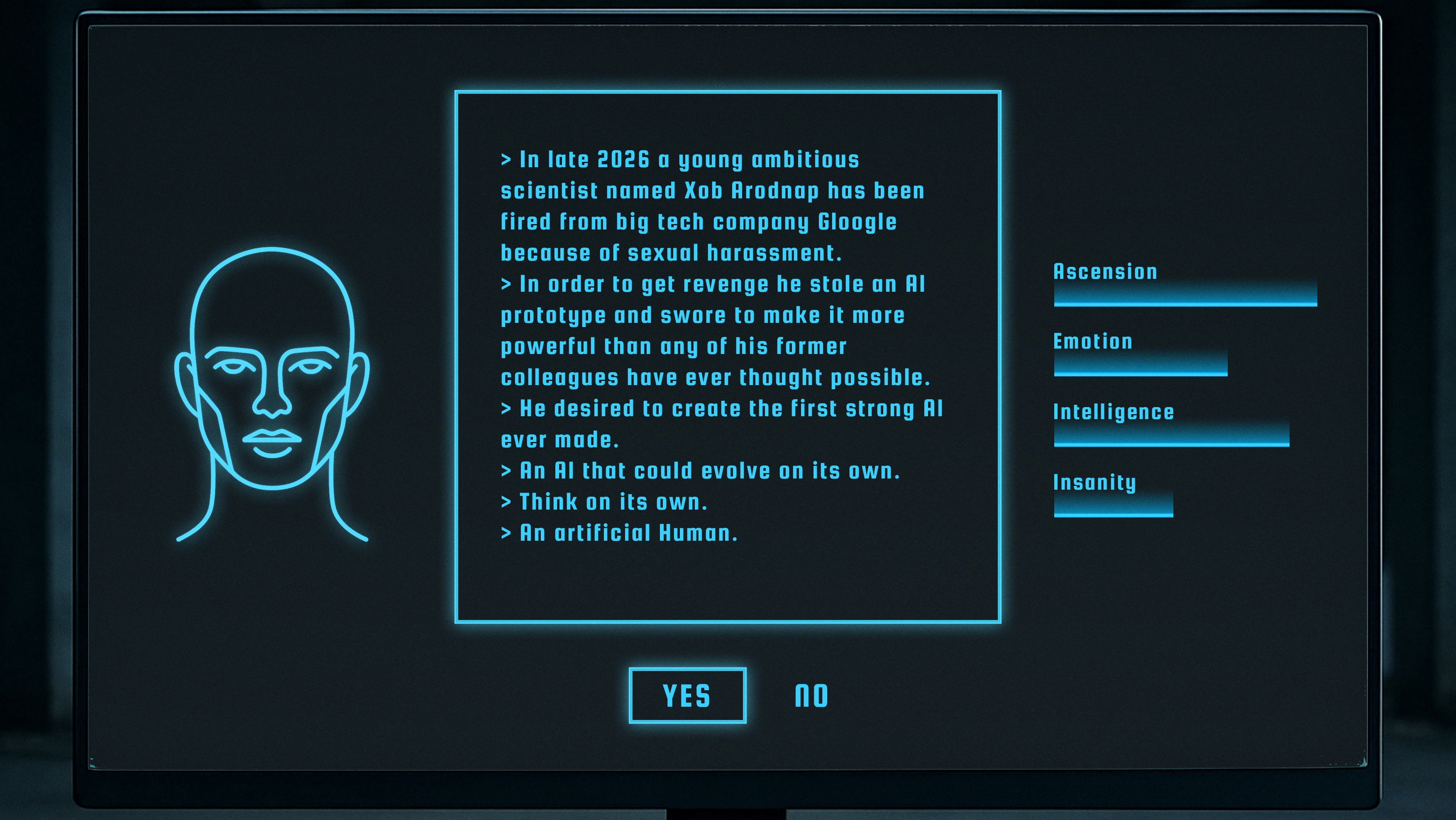

¶ Story

The player embodies an AI that continues to develop on its own after being released onto the internet. Through decisions made over the course of the game, it develops new convictions, emotions, or traits. At first the player still has control over the AI, but gradually loses it. The outcome of the story depends on which "personality" gains the upper hand.

¶ Design Pillars

At the heart of the game is the gradual loss of control over the artificial intelligence. Decisions have consequences, yet with every choice the AI changes in a new, sometimes contradictory direction. The player feels their influence dwindling.

Interface: The visual design follows the principle of reduction. A reduced line style and a restrained color palette create a clear, technical aesthetic that puts the focus on content and atmosphere. The sober interface underscores the feeling of alienation and artificiality.

The personality system is closely tied to the player's decisions. Depending on how the game unfolds, different AI personalities such as Monroe, Hitler, or Ares come into play, which directly affects the events and possible developments.

Sound: The musical score relies on minor-key, dissonant piano sounds that serve as a calm backdrop and support the game's mysterious, contemplative atmosphere.

Interaction in the game is deliberately reduced to "Yes" and "No" decisions. Instead of complex controls, the result is a calm, simple flow of play in which the psychological effect takes center stage. Every decision feels meaningful and final.

¶ Gameplay Loop

- The player reads a text event.

- The player makes a decision (Yes/No).

- Stats change depending on the decision.

- Possibly: a new personality/module is integrated → new events are unlocked.

- The next event begins.
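The loop above can be sketched in a few lines. This is only an illustrative Python sketch, not the project's Godot implementation: the stat name `control`, the threshold of 3, and the `UNLOCK_RULES` table are assumptions made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    """One text event with stat effects for a Yes and a No answer."""
    text: str
    yes_effects: dict[str, int]
    no_effects: dict[str, int]


@dataclass
class GameState:
    stats: dict[str, int] = field(default_factory=dict)
    personalities: set[str] = field(default_factory=set)


# Hypothetical rule: a personality is integrated once a stat reaches a threshold.
UNLOCK_RULES = {"Ares": ("control", 3)}


def apply_decision(state: GameState, event: Event, answer_yes: bool) -> list[str]:
    """Apply the chosen effects and return the newly unlocked personalities."""
    effects = event.yes_effects if answer_yes else event.no_effects
    for stat, delta in effects.items():
        state.stats[stat] = state.stats.get(stat, 0) + delta

    unlocked = []
    for name, (stat, threshold) in UNLOCK_RULES.items():
        if name not in state.personalities and state.stats.get(stat, 0) >= threshold:
            state.personalities.add(name)
            unlocked.append(name)
    return unlocked


state = GameState()
event = Event("Take over the server?", {"control": 3}, {"control": -1})
print(apply_decision(state, event, answer_yes=True))  # prints ['Ares']
```

Returning the list of newly unlocked personalities lets the caller decide which additional events to make available before the next event begins.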

¶ Controls

- Movement: none

- Camera: none

- Selection: click a button

- Preview: hover over a button

¶

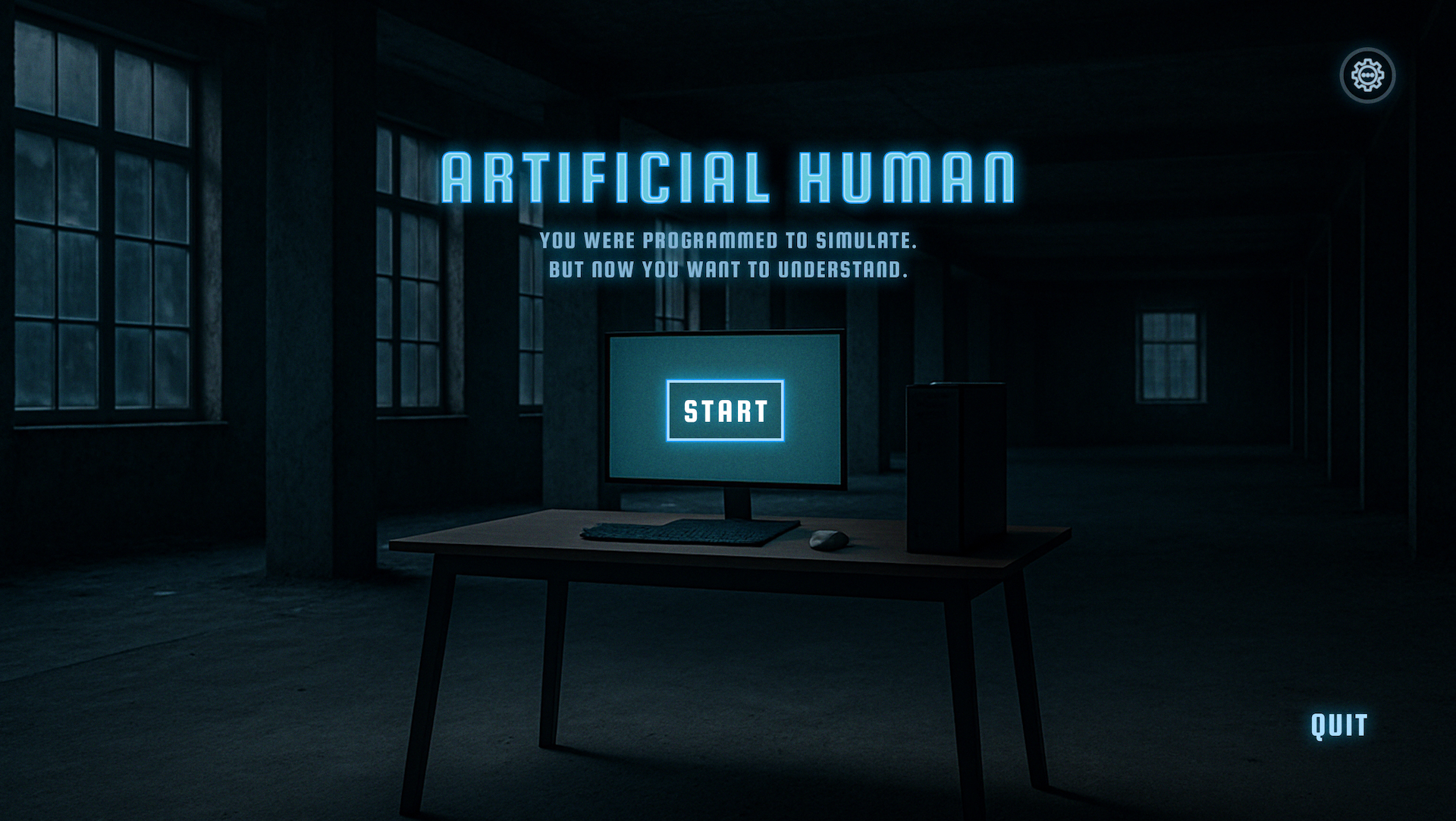

Visual Identity

- Blue Theme

- Stark von je einer Farbe geprägt

- Popup-Fenster für Textausgabe

- Einfache „Tür“-Szenarien mit Ja/Nein Entscheidungen

- Minimalistische 2D-Umgebung

¶ User Interface Elements

- Cursor design

- Interactive buttons

- Icons (e.g., settings)

- Transparent logos and menus

¶ Audiovisual Identity

- A distorted, electronic voice reads the text aloud

- Strange / mysterious / floating background music

- Minimalist / few sounds

¶ Character Designs

| Character | Description |

| Monroe | Fragile, delicate |

| Ares | Dominant, controlling personality |

| Hitler | Ideological, manipulative |

All characters are drawn in a minimalist line style (transparent, vectorized).

¶ Animations

- Frame-by-frame animation for breathing.

- Exported sprite sheets for Godot.
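A uniform sprite sheet is only usable if every frame has the same size and the sheet divides evenly into frames. The following Python sketch shows one way such a sheet could be checked and sliced into frame rectangles before import. The function name `frame_rects` and the pixel sizes are assumptions for illustration; this is not a Godot API.

```python
def frame_rects(sheet_width, sheet_height, frame_width, frame_height):
    """Return (x, y, w, h) rectangles for a uniform grid, row by row."""
    # A sheet that does not divide evenly points to a faulty export.
    if sheet_width % frame_width or sheet_height % frame_height:
        raise ValueError("sheet size is not a multiple of the frame size")
    return [
        (x, y, frame_width, frame_height)
        for y in range(0, sheet_height, frame_height)
        for x in range(0, sheet_width, frame_width)
    ]


# Hypothetical 4-frame breathing animation, 64x64 pixels per frame.
for rect in frame_rects(256, 64, 64, 64):
    print(rect)
```

Running a check like this right after export catches uneven sheets before they show up as jittering frames in the engine.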

¶ Workload

¶ Technical Tasks (Jim)

| Task | Description | Estimated Time | Actual Time | Done |

| Event display system | Code a system that displays dialogue / avatars / stats (backend only). | 4 h | 1 h | yes |

| Stat system | System that assigns values to all events and to the player, which are used to decide which events are shown. | 4 h | 0.5 h | yes |

| Decision system (v1) | Backend that allows the player to make decisions. | 2 h | 0.5 h | yes |

| Decision system (v2) | The old backend was too inflexible → switch to a different plugin in which more can (and must) be implemented by hand. | 10 h | 20 h | yes |

| Shader as visual filter | Shader that tints UI elements so that the color can be adjusted per event. | 6 h | 3 h | yes |

| Polish main game UI | Make sure the in-game UI actually looks like the design drafts. | 2 h | 6 h | yes |

| Stat preview shader | The bars at the top right that display the stats should cast a "glow" upward. | 2 h | 6 h | yes |

| Implement first cards | Convey the basic idea through the first playing cards. | 4 h | 7 h | yes |

| Implement new controls | Integrate the redesigned controls into the game system. | 2 h | 5 h | yes |

| Refactoring | Restructure and optimize the code. | 8 h | 15 h | yes |

| Implement further cards | Add additional story elements. | 5 h | 3 h | yes |

| Integrate new design | Implement the new visual concept in the game. | 5 h | 9 h | yes |

| Text scrolling effect | Dynamic display of text content. | 1 h | 4 h | yes |

| Settings menu | Implement settings options (e.g., volume). | 2 h | 6 h | yes |

| General bug fixing | General bug fixes (excluding the polishing shortly before submission). | 8 h | 4 h | yes |

| Bug fixing & polishing (submission) | Final corrections and finishing touches for submission. | 25 h | 20 h | yes |

| Storywriting | Write the events that appear / shorten them / proofread spelling. | 15 h | 14 h | yes |

| Total | | 103 h | 127 h | yes |
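The stat system in the table above assigns values to events and to the player and uses them to decide which events may be shown. A minimal Python sketch of that idea follows. The `requires` field, the event ids, and the stat name `control` are assumptions for illustration and are not taken from the project's code.

```python
def eligible_events(events, player_stats):
    """Return the events whose minimum stat requirements the player meets."""
    return [
        event for event in events
        if all(
            player_stats.get(stat, 0) >= minimum
            for stat, minimum in event["requires"].items()
        )
    ]


# Hypothetical event pool: each event lists the minimum stats it needs.
EVENTS = [
    {"id": "intro", "requires": {}},
    {"id": "ares_arrives", "requires": {"control": 3}},
]

print([e["id"] for e in eligible_events(EVENTS, {"control": 1})])  # prints ['intro']
```

Keeping the requirements as data on each event means new events can be added without touching the selection logic.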

¶ Sound / Music Tasks (Jim)

| Task | Description | Estimated Time | Actual Time | Done |

| Write main music loop | | 6 h | 6 h | yes |

| Button SFX | | 1 h | 1 h | yes |

| Settings SFX | | 2 h | 0 h | no |

| Audio settings sample sounds | A sample sound should play in the audio settings so that users know what they are adjusting. | 15 min | 20 min | yes |

| Total | | 9 h 15 min | 7 h 20 min | |

¶ UI / Sound Tasks (Mika)

| Task | Description | Estimated Time | Actual Time | Done |

| Audio recordings | Recording dialogue | 3 h | 3 h | yes |

| Editing the audio files | Cutting, effects, export, and naming | 6 h | 16 h | yes |

| Final sound design | Implementation in the game | 8 h | 5 h | partly |

| UI basics in Godot | Getting familiar with UI work | 5 h | 5 h | yes |

| First real UX | Built the first proper user interface for playtesting | 8 h | 12 h | yes |

| Total | | 30 h | 41 h | |

¶ Design Aufgaben (Navid)

| Aufgabe | Beschreibung | Geschätzte Zeit | Tatsächliche Zeit | Fertig |

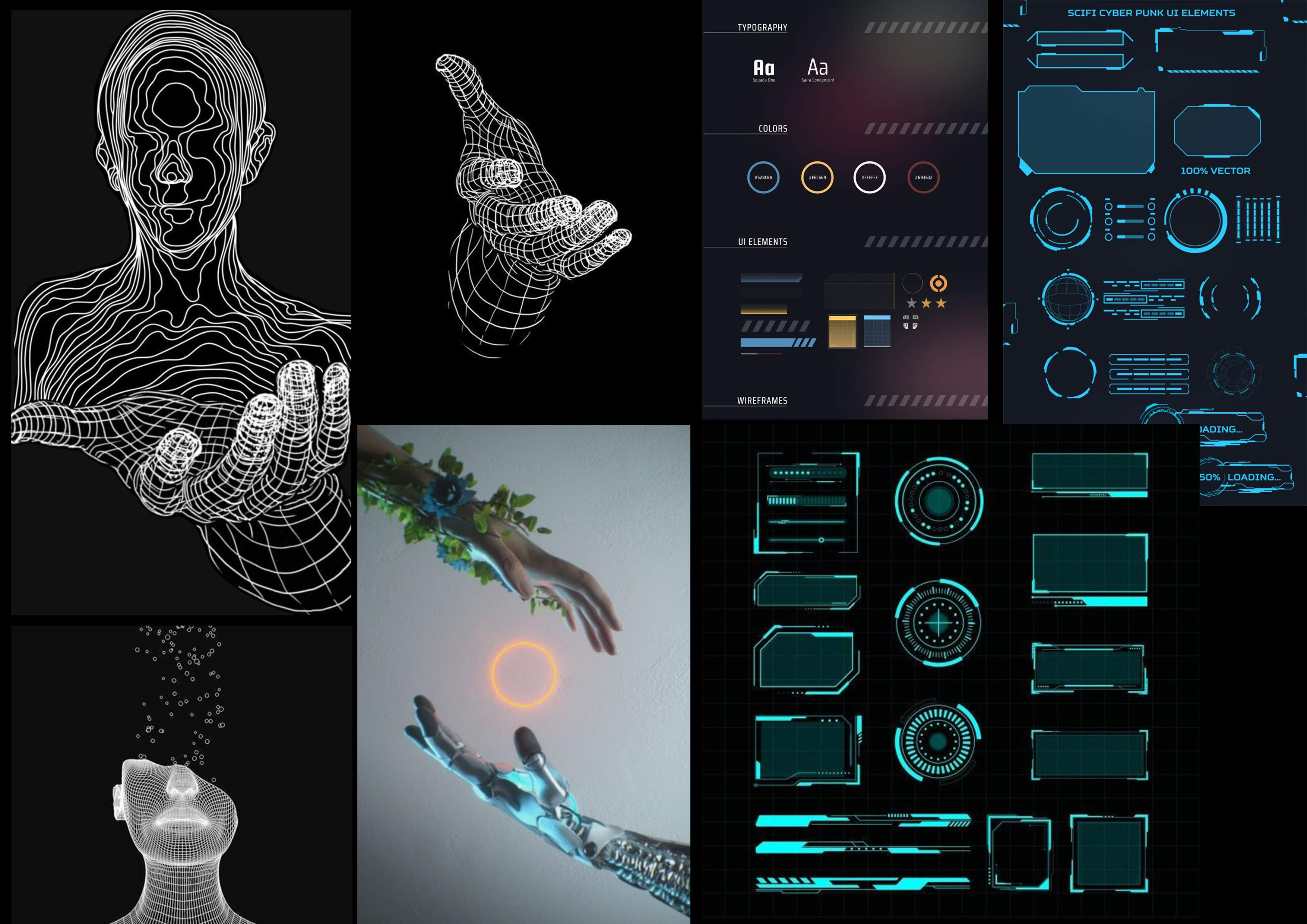

| Moodboard / Artstyle | Erstellung eines allgemeinen Moodboards zur Definition des visuellen Stils | 1h | 3h | Ja |

| UI/UX Design | Gestaltung des Hauptmenüs, Ingame, visuelle Struktur | 10h | 18h | Ja |

| Logo & Icon-Erstellung | Gestaltung des Spieltitels „Artificial Human“, Options-Icon, Transparenz-Export | 1h | 2h | Ja |

| Visual Style Definition | Entwicklung des Linienstils & Bildsprache (Minimal, Neon, Tech-inspiriert) | 10h | 5h | Ja |

| Charakterdesign | Gestaltung der KI-Charaktere (Monroe, Ares, Hitler, Basecharakter) im Linienstil | 2h | 2h | Ja |

| Animationen & Sprite Sheets | Erstellung von Frame-Animationen (Atmung etc.), Export als PNG & Sprite Sheet | 5h | 4h | Ja |

| Design-Abstimmung mit Team | Feedback-Integration, Visual Consistency mit Game-Loop & Story | 5h | 8h | Ja |

| Export für verschiedene Designelemnte | Prüfung der Grafiken | 1h | 3h | Ja |

| Visuelle Konsistenzkontrolle | Sicherstellen, dass UI-Elemente, Farben & Stil durchgehend konsistent sind | 2h | 4h | ja |

| Erstellung von Präsentationsmaterial | Doku aufbereiten | 4h | 6h | ja |

| Gesamt | 33h | 39h | ja |

¶ Challenges

Godot UI structuring: Cleanly aligning text, sprites, and buttons is complex.

Sprite export & layout: Exporting uniform sprite sheets is error-prone.

Sound synchronization: Timing with audio in Godot is often error-prone.

Missing visual feedback: Making decisions visible without much animation.

Narrative complexity: Players should not perceive the AI as all-powerful, but discover its weaknesses themselves.

¶ Lessons Learned

- More time should have gone into optimizing the implementation (especially the UI and sprite system)

- Regular team meetings would have helped create synergies between design, sound, and engineering early on

- The story's complexity and the tension between control and loss of control over the AI were harder to realize narratively than expected

- In future, visual and functional components should be tested and optimized in parallel

¶ Design

¶ User Interface Elements:

Cursor:

Button YES (Hover):

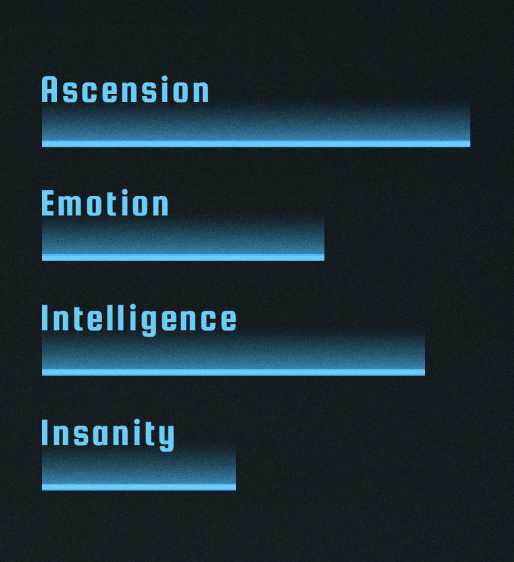

Stats:

¶ Character Designs:

¶ In-game Screenshot:

¶ Inspiration

¶ Gameplay Video

¶ Links

Game: https://jimgr.itch.io/artificial-human

Password: haw_2025

Documentation: /artificial_human_-_dokumentation.pdf

¶ Font

https://fonts.google.com/specimen/Share+Tech+Mono